The Paradigm Shift in Computing

I found my notes on a fundamental paradigm shift in how we compute: handwritten scribbles on my Samsung Note, made while I was attending Jensen’s digital keynote at GTC 2022. I have expanded them below.

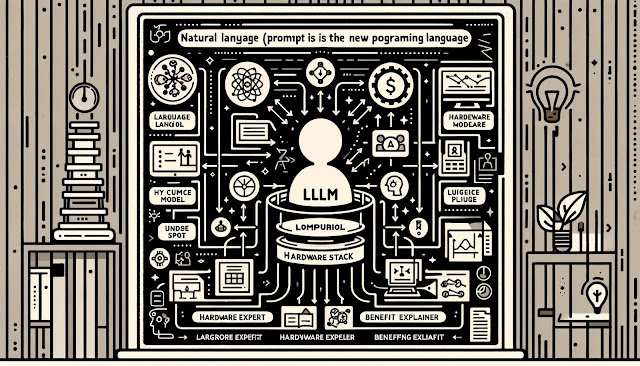

We are witnessing a fundamental paradigm shift in computing technology. The center of gravity is moving from hardware and operating systems (OS) to application programming interfaces (APIs) powered by large language models (LLMs), in a world that embraces natural language programming. No Moore’s Law-driven improvement in processor speed or memory size will alter the fact that our current computing landscape is being turned upside down. A new era of computing has arrived, one where LLMs and API-based services usher in a new way to interact with and program computers, abstracting away the hardware and operating system stack.

Computing has historically been built from the bottom up: we start with hardware, install an operating system, and then write applications in one of the many high-level languages such as Java, Python, or C++. We have gotten used to this model over the years and, generally, it has worked for us. So what’s the issue? First of all, programming hardware directly, or even on top of an OS, requires developers to understand a lot about both. It’s labor-intensive and complicated on all fronts.

Enter LLMs and API-based services: you call an API, and magic happens. There is no need to care about the hardware or the underlying OS, just powerful LLMs that have been trained on massive amounts of data and are capable of paraphrasing human text and being adapted to other ambitious tasks. Under the hood, accelerators such as GPUs and IPUs power these specialized models, but they are abstracted away from the end user. One of the most exciting developments of this new world is how we instruct these LLMs to perform a task. Instead of writing a program in a traditional programming language, we can use natural language, or prompts. Think of a prompt as a simple, human-sounding instruction. Whether you write ‘please write a blog post about the benefits of meditation’ or ‘translate this paragraph from English to Spanish’, you are instructing the LLM to perform the task. Such natural language programming democratizes access: non-technical users don’t need to spend a year in a boot camp to become programmers, because the barrier to entry is far lower, and developers can try out and iterate on an idea much faster when it is just a simple prompt.
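To make the idea of "programming with a prompt" concrete, here is a minimal Python sketch. It assumes nothing about any real provider: `fake_llm` is a hypothetical offline stand-in for whatever hosted LLM API you would actually call, and `build_translation_prompt` and `run_task` are names I made up for illustration. The point is only that the "program" is the prompt string itself.

```python
from typing import Callable


def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a hosted LLM endpoint.

    It just echoes the prompt so the example runs offline; a real
    service would return generated text instead.
    """
    return f"[model output for: {prompt}]"


def build_translation_prompt(text: str, source: str, target: str) -> str:
    """The 'program' is plain natural language with a few slots filled in."""
    return f"Translate this paragraph from {source} to {target}:\n\n{text}"


def run_task(prompt: str, llm: Callable[[str], str] = fake_llm) -> str:
    """Send the prompt to whichever model callable is supplied."""
    return llm(prompt)


prompt = build_translation_prompt("Good morning.", "English", "Spanish")
result = run_task(prompt)
print(result)
```

Swapping `fake_llm` for a function that calls a real service would leave the rest unchanged, which is the abstraction this post is about: the caller never touches the hardware or the OS behind the model.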

The other major shift in this new paradigm is the introduction of task-specific agents powered by LLMs. These agents are trained for specific tasks, like answering questions, generating text, or analyzing tabular data. They can be accessed via their APIs and combined with other algorithms to create more complex applications. You could have one agent analyze customer feedback and others fulfill different functions, such as generating personalized recommendations or writing product descriptions. These agents can interact to produce the desired result for the user. The implications of this paradigm shift are enormous: it may democratize computation and usher in a new era of innovation. Suddenly, everyone can build applications using natural language on top of an invisible machine that abstracts away all the details of the hardware and the OS that make it tick.
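As a rough illustration of agents working together, the sketch below chains a feedback-analysis agent into a recommendation agent. Everything here is an assumption made for the example: the `Agent` class, the prompt templates, and `stub_llm`, a hypothetical rule-based stand-in that lets the code run without any real model. A production agent would wrap a call to an actual LLM API.

```python
from dataclasses import dataclass
from typing import Callable

LLM = Callable[[str], str]


def stub_llm(prompt: str) -> str:
    """Hypothetical offline stand-in for a hosted model."""
    if prompt.startswith("Classify"):
        # Crude keyword rule, only so the example is deterministic.
        return "negative" if "slow" in prompt.lower() else "positive"
    return f"[model output for: {prompt}]"


@dataclass
class Agent:
    """A task-specific agent: a prompt template bound to a model callable."""
    name: str
    template: str
    llm: LLM = stub_llm

    def run(self, **fields: str) -> str:
        return self.llm(self.template.format(**fields))


feedback_agent = Agent(
    name="feedback",
    template="Classify the sentiment of this customer feedback "
             "as positive or negative: {text}",
)
recommend_agent = Agent(
    name="recommend",
    template="Suggest one product recommendation for a customer "
             "whose feedback was {sentiment}: {text}",
)


def handle_feedback(text: str) -> dict[str, str]:
    """Pipe the first agent's output into the second agent's prompt."""
    sentiment = feedback_agent.run(text=text)
    recommendation = recommend_agent.run(sentiment=sentiment, text=text)
    return {"sentiment": sentiment, "recommendation": recommendation}


result = handle_feedback("Delivery was slow and the box was damaged.")
print(result["sentiment"])
```

The glue between the agents is ordinary code: one agent's answer becomes a field in the next agent's prompt.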

You can concentrate on what problem you want to solve, not on how you’re solving it. To be sure, there are all sorts of difficulties to confront. There are security and privacy issues to grapple with. There’s the ethics involved in the use of LLMs and API-driven services more generally. There are questions about how interpretable or transparent a given model is. There are concerns about potential biases. But all such issues are part of the vital work in developing this new paradigm.

To conclude, the development of LLM-powered APIs, together with natural language programming, amounts to a paradigm shift in how we program computers. By rendering the complexity of the underlying hardware and operating system largely irrelevant to the developer, this shift makes computing much more accessible and could set off another cycle of innovation. I believe that, in the coming years, this new paradigm will continue to progress and define the future of computing. Hopefully, it will unlock many more opportunities.

As we shift towards this new paradigm in computing, it is important to take note of both the benefits and the challenges that come with it. To make them easier to follow, I will summarize these points as bullet points:

Benefits with clarifications:

>> The Role of Hardware and OS: LLMs and APIs abstract away the complexity of the underlying hardware and OS. This is largely true, as developers using these tools often don’t need to manage or even understand the hardware directly. On the other hand, deep understanding and optimization at the hardware level are still crucial for developing and enhancing the performance of these LLMs themselves.

>> Programming with Prompts: Natural language prompts can be used instead of traditional programming languages. This is accurate and one of the revolutionary aspects of LLMs. For more complex applications, traditional programming still plays a crucial role in integrating and managing these prompts and their responses.

>> Democratization of Computing: This shift lowers the barrier to entry and democratizes access to powerful computing capabilities. Realizing this fully, however, means addressing current limitations, such as uneven access to these technologies and the potential cost barriers associated with API calls.

>> Task-Specific LLM Agents: Task-specific LLM agents are an important trend. These specialized models enhance efficiency and effectiveness in specific domains but require careful training and management to ensure they perform as expected.
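On the "Programming with Prompts" point above, this is one hedged sketch of what "integrating and managing prompts and their responses" can look like in traditional code: ask for JSON, validate the reply, and retry if it is malformed. The helper `ask_for_json` and the stub `make_flaky_llm` are hypothetical names invented for this example, and the stub's canned replies are made up so the code runs offline.

```python
import json
from typing import Callable, Iterator


def make_flaky_llm() -> Callable[[str], str]:
    """Hypothetical stub: the first reply is malformed, the second is valid JSON."""
    replies: Iterator[str] = iter([
        "Sure! Here you go",
        '{"sentiment": "positive", "score": 0.9}',
    ])

    def llm(prompt: str) -> str:
        return next(replies)

    return llm


def ask_for_json(
    llm: Callable[[str], str],
    prompt: str,
    required_keys: set[str],
    max_attempts: int = 3,
) -> dict:
    """Call the model until it returns a JSON object with the required keys."""
    for _ in range(max_attempts):
        raw = llm(prompt)
        try:
            data = json.loads(raw)
        except json.JSONDecodeError:
            continue  # Not JSON at all: try again.
        if isinstance(data, dict) and required_keys.issubset(data.keys()):
            return data
    raise ValueError(f"no valid reply after {max_attempts} attempts")


reply = ask_for_json(
    make_flaky_llm(),
    "Return the sentiment of 'I love it' as JSON with keys sentiment and score.",
    required_keys={"sentiment", "score"},
)
print(reply)
```

The prompt does the "what", while conventional code still handles parsing, validation, and failure, which is why traditional programming keeps its role in larger applications.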

Challenges:

>> Security and Ethical Considerations: Security and ethics are crucial, complex challenges in deploying LLMs, and they deserve more than a passing mention if their significance is to be fully appreciated.

>> Integration Challenges: While LLMs are easy to use on their own, integrating them into existing systems and workflows can be non-trivial. Companies may need to undertake significant infrastructure changes or develop new interfaces, which can be costly and time-consuming.

>> Impact on Employment: The shift towards natural language programming and automated systems could have profound impacts on employment within the tech industry. There may be a shift in the types of jobs available, with more emphasis on data management, model training, and oversight rather than traditional coding.

>> Cultural and Linguistic Biases: LLMs are trained on large datasets, and their outputs often reflect the biases present in that data. This can lead to biased outputs that might perpetuate stereotypes or disadvantage certain groups. Addressing these biases is critical for ethical deployment.

>> Environmental Impact: The environmental impact of training large-scale models on powerful hardware is a significant concern. These models require a lot of energy, contributing to carbon emissions. Sustainable practices in AI development and deployment will be increasingly important.

>> Regulatory Landscape: As LLMs become more integral to business and everyday life, the regulatory landscape will need to evolve to ensure these technologies are used safely and ethically. There could be new laws and regulations governing data use, model transparency, and accountability.

It's uncertain whether we'll achieve AGI in my lifetime. One thing I know for sure is that the way we interact with computers and the role they play in our daily lives have changed for the better. The advancements in computing technology and AI have revolutionized how we work, communicate, and access information. We can only imagine what the future holds, but it's exciting to think about the possibilities that lie ahead.
