GTC 2024 Announcements

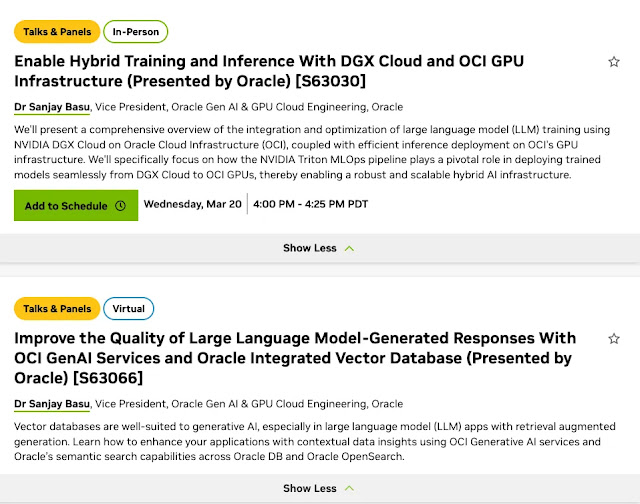

I had two sessions at GTC 2024.

The headline announcement at GTC 2024 was the new Blackwell GPU architecture, which NVIDIA positions as the start of a new era for AI and high-performance computing. The architecture introduces several key innovations aimed at dramatically improving the speed and energy efficiency of AI model training and inference.

One of Blackwell’s highlights is its design: two “reticle-sized dies” connected by NV-HBI, NVIDIA’s proprietary high-bandwidth interface. The link runs at up to 10 TB/s, so the two dies operate as a single unified GPU with no performance compromise. NVIDIA claims Blackwell can train AI models up to four times faster than its predecessor, Hopper, and run AI inference up to thirty times faster. It also reportedly improves energy efficiency by up to 25x, albeit with a significant increase in power consumption: up to 1,200 watts per chip.
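
Vendor claims like these mix multiplicative factors (“4x”, “30x”) with percentages, and the conversion is easy to get wrong: an N-fold improvement is an increase of (N - 1) x 100%, so a 25x improvement is +2400%, not +2500%. The short Python sketch below does the conversion. The factors are the headline claims as quoted in this post; reading the energy-efficiency claim as a 25x factor is an assumption about what NVIDIA’s figure refers to.

```python
def percent_improvement(factor: float) -> float:
    """Convert an 'N times better' factor into a percentage improvement.

    A factor of 1.0 means no change (0%); a factor of 25.0 means the new
    value is 24 times larger than the old one on top of it, i.e. +2400%.
    """
    return (factor - 1.0) * 100.0


# Headline vendor claims (not independent measurements).
# Assumption: the energy-efficiency claim is read as a 25x factor.
claims = {
    "training speedup": 4.0,
    "inference speedup": 30.0,
    "energy efficiency": 25.0,
}

for name, factor in claims.items():
    print(f"{name}: {factor:g}x = +{percent_improvement(factor):g}%")
```

Running it prints one line per claim, for example `energy efficiency: 25x = +2400%`.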

The Blackwell architecture introduces six primary innovations: a very high transistor count (208 billion transistors), a second-generation Transformer Engine, fifth-generation NVLink, a new Reliability, Availability, and Serviceability (RAS) Engine, enhanced Confidential Computing support including TEE-I/O capability, and a dedicated Decompression Engine that can unpack data at up to 800 GB/s.

NVIDIA also introduced the NVIDIA GB200 NVL72, an entire 19-inch rack system that combines 36 Grace CPUs and 72 Blackwell GPUs and is designed to function as a single GPU. The system offers 13.8 TB of HBM3e memory and peak AI inference performance of 1.44 exaFLOPS. It is liquid-cooled, which lowers operating costs and allows denser rack configurations.
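
Rack-level totals are easier to interpret per GPU. The Python sketch below divides the quoted figures across the rack, assuming (as a simplification) that memory and compute are split evenly across the 72 GPUs and that the units are decimal (1 TB = 1000 GB, 1 EFLOPS = 1000 PFLOPS). The helper name `per_gpu_share` is purely illustrative.

```python
# Figures quoted for the GB200 NVL72 rack (vendor numbers, decimal units).
NUM_GPUS = 72
NUM_CPUS = 36
TOTAL_HBM3E_TB = 13.8         # total HBM3e across the rack, as quoted
PEAK_INFERENCE_EFLOPS = 1.44  # peak AI inference throughput, as quoted


def per_gpu_share(total: float, scale: float, count: int) -> float:
    """Split a rack-level total evenly across `count` GPUs, rescaling units.

    Assumption: an even split; real per-GPU specs may differ slightly.
    """
    return total * scale / count


hbm_per_gpu_gb = per_gpu_share(TOTAL_HBM3E_TB, 1000, NUM_GPUS)         # TB -> GB
pflops_per_gpu = per_gpu_share(PEAK_INFERENCE_EFLOPS, 1000, NUM_GPUS)  # EFLOPS -> PFLOPS

print(f"GPUs per Grace CPU: {NUM_GPUS // NUM_CPUS}")            # 2
print(f"HBM3e per GPU: ~{hbm_per_gpu_gb:.1f} GB")               # ~191.7 GB
print(f"Peak inference per GPU: ~{pflops_per_gpu:.1f} PFLOPS")  # ~20.0 PFLOPS
```

So each Blackwell GPU in the rack accounts for roughly 192 GB of HBM3e and about 20 PFLOPS of the peak inference figure, with two GPUs paired to every Grace CPU.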

Beyond Blackwell, NVIDIA’s GTC 2024 announcements included advances in computational lithography with cuLitho, new networking hardware (the Quantum-X800 InfiniBand and Spectrum-X800 Ethernet platforms) supporting 800 Gb/s transfer rates, developments in NVIDIA’s Omniverse platform for digital twin simulations, and progress in quantum computing, cloud services, and AI. Here’s a summary of the most significant of these developments:

Quantum Computing and Cloud Services

NVIDIA unveiled its Quantum Cloud, a platform for quantum computing research and development. The cloud service aims to broaden access to quantum resources for scientists worldwide and includes microservices that let CUDA-Q developers compile applications for execution on the quantum cloud. The platform supports a range of capabilities and third-party software integrations, such as the Generative Quantum Eigensolver, Classiq’s integration with CUDA-Q, and QC Ware Promethium for tackling complex quantum chemistry problems. NVIDIA’s Quantum Cloud is part of a broader initiative to foster the quantum computing ecosystem, which involves more than 160 partners, including major cloud service providers such as Google Cloud, Microsoft Azure, and Oracle Cloud Infrastructure.

DGX Cloud and NVIDIA AI Infrastructure

NVIDIA’s collaboration with Microsoft has expanded to integrate NVIDIA’s AI and Omniverse technologies with Microsoft Azure, Azure AI, and Microsoft 365. This partnership brings the NVIDIA Grace Blackwell GB200 and Quantum-X800 InfiniBand to Azure, enhancing capabilities for advanced AI models. The collaboration also leverages NVIDIA DGX Cloud to drive innovation in healthcare through Azure, aiming to accelerate AI computing across various applications.

NVIDIA’s NIM (NVIDIA Inference Microservices) is a software platform for streamlining the deployment of both custom and pre-trained AI models into production environments. It pairs models with an optimized inference engine and packages them into containers that are exposed as microservices. Deploying an AI model has traditionally taken weeks or months; this approach significantly reduces both the time and the expertise required. NIM aims to accelerate companies’ AI roadmaps by combining NVIDIA’s hardware for compute with a software layer of curated microservices.

NIM offers a range of features designed to simplify and accelerate the deployment of AI applications across various domains. It includes prebuilt containers and Helm charts, support for industry-standard APIs, and domain-specific models leveraging NVIDIA CUDA libraries. Additionally, NIM utilizes optimized inference engines to ensure the best possible performance on NVIDIA’s accelerated infrastructure. It supports a wide range of AI models, including large language models (LLMs), vision language models (VLMs), and domain-specific models for tasks like speech, images, video, and more.
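
Because NIM exposes industry-standard APIs, a client can call an LLM microservice the way it would call any OpenAI-style chat-completions endpoint. The Python sketch below only builds the HTTP request with the standard library; the base URL, port, and model name are hypothetical placeholders, and the assumption that the endpoint is OpenAI-compatible at `/v1/chat/completions` should be checked against the documentation for the specific NIM you deploy.

```python
import json
import urllib.request

# Hypothetical values: a NIM container assumed to be listening locally,
# and a placeholder model name. Replace both with your deployment's values.
BASE_URL = "http://localhost:8000"
MODEL = "example/llm-model"


def build_chat_request(prompt: str, max_tokens: int = 128) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat-completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=f"{BASE_URL}/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send(request: urllib.request.Request) -> str:
    """Send the request and return the first choice's message text.

    Assumes an OpenAI-style response body with a `choices` list.
    """
    with urllib.request.urlopen(request, timeout=30) as response:
        body = json.load(response)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    req = build_chat_request("Summarize the Blackwell announcement in one sentence.")
    print(req.get_method(), req.full_url)
    # print(send(req))  # uncomment once a NIM endpoint is actually running
```

Keeping request construction separate from sending makes it easy to inspect the payload, or to point the same client at a different deployment, without any network access.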

NIM is part of NVIDIA AI Enterprise, NVIDIA’s software suite for enterprise-grade AI deployments. The suite also includes CUDA-X microservices for tasks such as data processing and guardrails. NVIDIA is working with major cloud service providers, data platform providers, and infrastructure software platforms so that NIM microservices can be deployed across a wide range of infrastructure and integrated easily into enterprise applications. For businesses looking to use NVIDIA’s AI capabilities, NIM is a significant step toward simplifying and accelerating the move of AI models into production.

Healthcare & Life Sciences

NVIDIA made several significant announcements in the Healthcare and Life Sciences sectors, focusing on the use of AI to revolutionize drug discovery, genomics, and the development of new technologies for accessing AI models. One of the key highlights was the expansion of the NVIDIA BioNeMo™ generative AI platform for drug discovery. This platform now includes foundation models capable of analyzing DNA sequences, predicting changes in protein structures due to drug molecules, and determining cell functions based on RNA. The expansion aims to simplify and accelerate the training of models on proprietary data, facilitating the deployment of models for drug discovery applications.

NVIDIA introduced several new generative AI models available through the BioNeMo service. These models are designed to assist researchers with tasks such as identifying potential drug molecules, predicting the 3D structure of proteins, and optimizing small molecules for therapeutic purposes. The available models include DeepMind’s AlphaFold2, which significantly reduces the time required to determine a protein’s structure, and DiffDock, which predicts how small molecules dock with target proteins with high accuracy.

NVIDIA’s announcements also highlighted collaborations with major cloud service providers like Amazon Web Services, Oracle (OCI), and Microsoft Azure to enhance access to AI models through NVIDIA Inference Microservices (NIMs). These optimized cloud-native “microservices” aim to accelerate the deployment of generative AI models across different environments, from local workstations to cloud services. NIMs are expected to expand the pool of developers capable of deploying AI models by simplifying the development and packaging process.

NVIDIA Earth-2 Climate Platform

Another notable announcement was the launch of NVIDIA Earth-2, a cloud platform designed to help mitigate the economic losses caused by climate change. Earth-2 uses NVIDIA CUDA-X microservices to deliver high-resolution weather and climate simulations, with significant gains in efficiency and resolution over current models. Early adopters are already planning to use Earth-2 for more accurate and efficient predictions, and NVIDIA expects the platform to transform weather forecasting and climate research.

DRIVE Thor and Transportation Innovations

NVIDIA showcased DRIVE Thor, a centralized car computer for next-generation vehicles, including electric vehicles, autonomous trucks, and robotaxis, which will now be built on the Blackwell architecture. DRIVE Thor consolidates cockpit features and autonomous driving capabilities on a single centralized platform, promising significant improvements in performance and safety for the transportation industry. This development is part of NVIDIA’s broader effort to transform transportation through AI and computing.

Taken together, these GTC 2024 announcements underscore NVIDIA’s commitment to advancing computing and AI across a wide range of fields, from quantum computing and cloud services to climate simulation and autonomous vehicles.

Telecommunications

NVIDIA announced new services and solutions targeting the telecommunications sector, particularly focusing on advancements in AI technologies for 5G and the upcoming 6G networks, as well as partnerships aimed at enhancing telco-specific AI applications.

One of the key announcements was the introduction of the 6G Research Cloud platform. This platform is designed to apply AI to the Radio Access Network (RAN), featuring tools such as the Aerial Omniverse Digital Twin for 6G, the Aerial CUDA-Accelerated RAN, and the Sionna Neural Radio Framework. These tools are aimed at accelerating the development of 6G technologies, facilitating connections between devices and cloud infrastructures to support a hyper-intelligent world of autonomous vehicles, smart spaces, and more immersive educational experiences. The initiative has garnered support from industry giants like Ansys, Arm, Fujitsu, and Nokia, among others.

In addition to the technological advancements, NVIDIA showcased how AI is shaping a new era of connectivity for telecommunications. AI-driven technologies are transforming operations and enabling the construction of efficient and fast 5G networks. This includes the use of metaverse digital twins for predictive maintenance and network performance optimization, as well as the development of new business models leveraging 5G and edge services. NVIDIA’s collaborations, such as with Fujitsu on a 5G virtualized radio access network (vRAN) solution, underscore the role of accelerated computing and AI in the future of connectivity.

NVIDIA and ServiceNow have expanded their partnership to introduce telco-specific generative AI solutions aimed at elevating service experiences. These solutions provide streamlined and rapid incident management, leveraging generative AI to decipher technical jargon and distill complex information. This partnership is focused on solving the industry’s biggest challenges and driving global business transformation for telcos.
